Computer Vision for Automated Facial Characteristics Detection

DOI: https://doi.org/10.51173/eetj.v1i1.5

Keywords: Pterygium, Deep Learning, Xception, ViT, VGG16, Gender Recognition, Facial Characteristics, Bell's Paralysis

Abstract

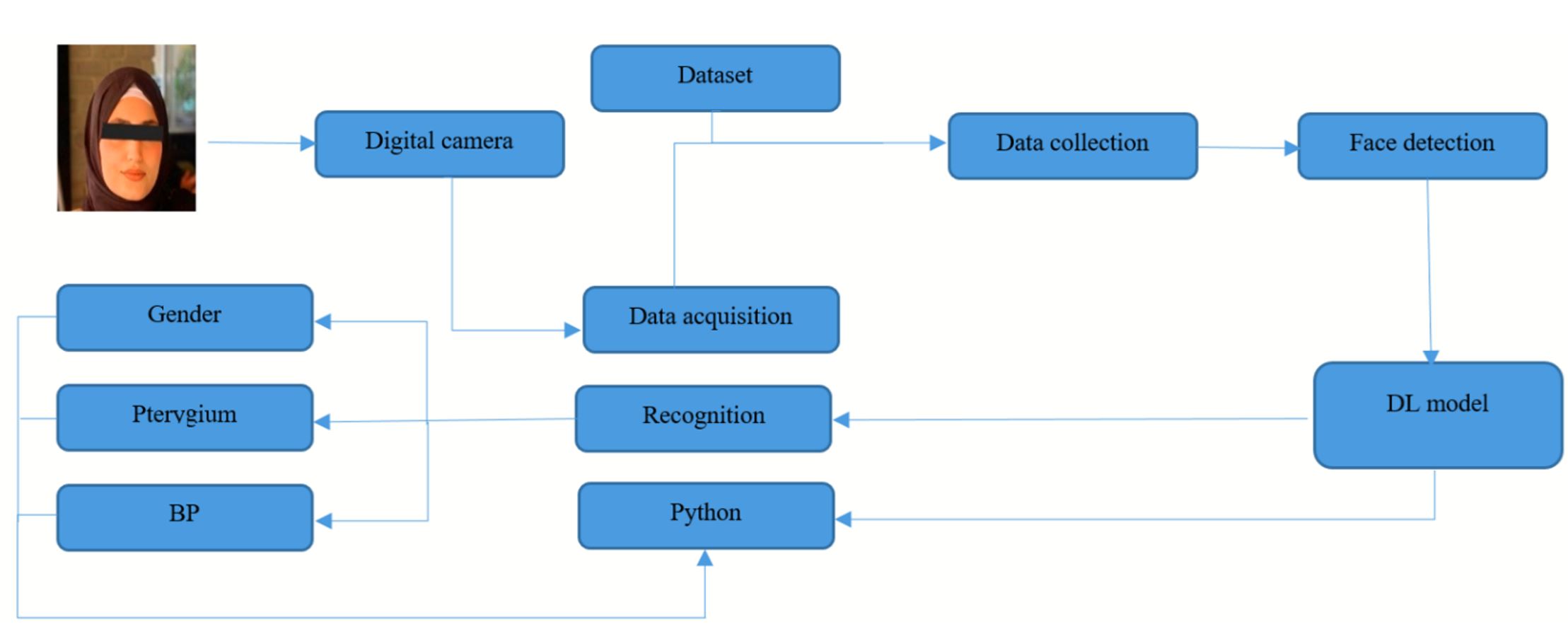

Diagnosing diseases in their early stages allows patients to consult a specialist before the disease advances and to avoid future complications. A real-time imaging system that reliably provides information about a patient, including gender classification and the detection of pterygium and Bell's paralysis, can shorten the time needed for diagnosis and reduce human error. This study applies three deep learning algorithms, Visual Geometry Group (VGG16), Vision Transformer (ViT), and Xception, and evaluates their performance in detecting gender, pterygium, and Bell's paralysis. ViT achieved the highest overall performance of the three algorithms.
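The abstract does not state which metrics were used to compare VGG16, ViT, and Xception. As an illustration only, the sketch below assumes two common choices, accuracy and macro-averaged F1, computed from true and predicted labels for a single task (for example, pterygium versus normal eye). The function names, model names, and toy labels are hypothetical and do not reproduce the study's data or results.

```python
# Illustrative sketch: comparing classifiers on one task by accuracy and macro-F1.
# Assumption: these metrics are example choices; the study's actual metrics are not stated here.


def confusion_counts(y_true, y_pred, positive):
    """Return (tp, fp, fn) when `positive` is treated as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    return tp, fp, fn


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores, F1 = 2*TP / (2*TP + FP + FN)."""
    classes = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in classes:
        tp, fp, fn = confusion_counts(y_true, y_pred, c)
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


def rank_models(y_true, predictions):
    """Return model names sorted best first by macro-F1, ties broken by accuracy."""
    return sorted(
        predictions,
        key=lambda name: (macro_f1(y_true, predictions[name]),
                          accuracy(y_true, predictions[name])),
        reverse=True,
    )


if __name__ == "__main__":
    # Hypothetical toy labels (0 = normal, 1 = pterygium); not data from the study.
    y_true = [0, 0, 1, 1]
    preds = {"model_a": [0, 0, 1, 1], "model_b": [0, 1, 1, 1]}
    for name in rank_models(y_true, preds):
        print(name, accuracy(y_true, preds[name]), round(macro_f1(y_true, preds[name]), 3))
```

Macro averaging weights each class equally, which matters when one label (such as a disease class) is rarer than the other; plain accuracy can look high on imbalanced data even when the rare class is mostly missed.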
